1. Treating vulnerable people as medical test subjects

In the 1940s and 1950s, medical experiments were often carried out on prisoners, psychiatric patients, and orphaned children without proper consent. Researchers exposed subjects to radiation, diseases, and harmful drugs, believing they were advancing science and public health. Families often had little idea what was happening, and institutions treated this as routine. It was a disturbing reality that most Americans didn’t question at the time.

Historians now call this period a dark chapter in medical ethics. These experiments led to severe suffering and long-term health damage. Today, strict rules on informed consent and human rights prevent such actions. Understanding this history highlights how much medical ethics has evolved over time. Practices once labeled “normal research” would now be crimes.

2. Spraying bacteria and mosquitoes over cities for testing

In the 1950s, U.S. military researchers tested biological warfare methods by secretly spraying bacteria they believed to be harmless over San Francisco. Residents inhaled unknown particles without being warned, and some fell ill. Another project released over 300,000 mosquitoes in Georgia to study insect dispersal. At the time, these tests were justified as necessary for national security and rarely questioned by the public.

Experts today call these experiments unethical and reckless. Citizens were never given the choice to avoid exposure or know potential risks. Documents declassified decades later showed how far Cold War fears pushed science beyond ethical boundaries. The experiments helped shape biological warfare knowledge but violated trust and safety. Modern rules on human testing emerged partly from outrage over these covert programs.

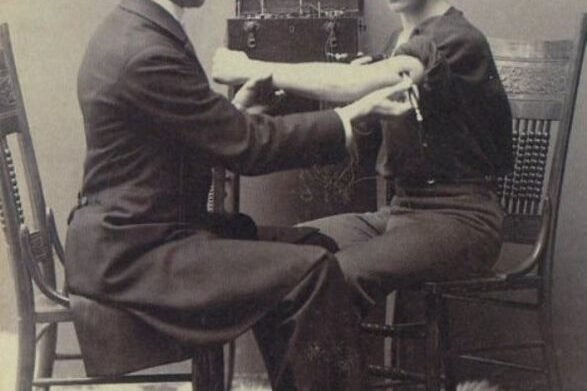

3. Trying shocking stockings to deter mice

In 1941, an inventors’ expo in Los Angeles featured an odd creation: copper mesh stockings wired to deliver a small electric jolt to any mouse that tried to climb a leg. It was presented as a futuristic household gadget. Attendees found it quirky but feasible, reflecting a time when wild inventions were celebrated rather than ridiculed. The idea was treated as clever modern thinking, not bizarre or dangerous.

Historians note that mid-century America loved novelty gadgets, believing technology could solve any domestic problem. The shocking stockings never became a household staple, yet they weren’t considered absurd then. Today, the invention seems more like a comedy prop than real innovation. It highlights how open people once were to untested ideas if they promised futuristic convenience.

4. Wearing extravagant zoot suits during fabric rationing

World War II rationing restricted fabric use, yet some young men wore oversized zoot suits with wide legs and long jackets, defying rules on cloth conservation. Authorities condemned the style as unpatriotic, but many saw it as a powerful cultural statement, particularly in minority communities. Wearing these suits was bold and rebellious, yet normal within certain circles despite nationwide rationing campaigns.

Fashion historians describe zoot suits as symbols of identity, resistance, and pride. While critics labeled them wasteful, wearers celebrated the look as self-expression. The style led to clashes like the 1943 Zoot Suit Riots, where discrimination against wearers turned violent. Today, the suits are remembered as both fashion and protest. They show how clothing once challenged authority during a time of strict social control.

5. Portraying married couples in twin beds on television

In the 1950s, U.S. television showed most married couples sleeping in separate twin beds. Broadcast standards of the era, which echoed the Hays Code that governed Hollywood films, discouraged depictions of couples in the same bed in the name of decency. Families watching didn’t find it strange. It became a normal visual shorthand for a wholesome marriage in sitcoms of the decade.

Media experts explain that these depictions reflected conservative attitudes toward intimacy. Networks feared showing couples sharing a bed would scandalize viewers. Today, the practice feels funny and unrealistic, but at the time it went unquestioned. This censorship rule lasted for years, shaping what Americans thought of as “proper” on-screen relationships.

6. Experimenting with truth serums on unsuspecting personnel

In the late 1940s, the U.S. Navy ran Project Chatter, testing drugs like LSD and scopolamine on sailors and prisoners to explore potential truth serums. Many subjects weren’t informed of the real risks or side effects. Military leaders considered these tests groundbreaking research rather than harmful experimentation. At the time, secrecy and science excused questionable ethics.

Today, experts classify these trials as human rights violations. Many participants suffered mental health problems afterward. The project later merged with other covert programs like MKUltra, which also tested psychoactive drugs. These studies highlight a period when scientific ambition and national security often outweighed individual safety and informed consent.

7. Infecting prisoners with malaria to test treatments

During the 1940s, researchers from the U.S. Army and the University of Chicago infected prisoners at Stateville Penitentiary with malaria to test new drugs. The prisoners volunteered in exchange for shorter sentences, but many didn’t fully understand the risks. This was considered normal medical research for the war effort. Authorities praised the study as serving humanity despite its coercive nature.

Modern ethicists argue that offering freedom in exchange for disease exposure removes true consent. The study did advance malaria treatment, but it raised serious moral concerns. It’s now cited as a turning point that influenced medical ethics standards worldwide. Experiments like this remind us how easily vulnerable populations were used in the name of progress.

8. Testing psychochemical warfare on soldiers

Between 1956 and 1975, the U.S. Army tested LSD, BZ, and other chemicals on active-duty soldiers at Edgewood Arsenal. Officials wanted to find non-lethal agents for warfare, believing temporary incapacitation was safer than traditional weapons. Many soldiers weren’t fully informed about potential side effects, and experiments continued under strict secrecy. At the time, this was framed as standard defense research for national security.

Historians now view these programs as unethical and risky. Soldiers reported psychological trauma and health issues for years after. Some were unable to seek justice because records were classified. Experts say these tests highlight a Cold War mindset where military objectives outweighed personal safety. Today, international agreements strictly regulate chemical warfare research, showing how attitudes toward human testing have drastically shifted.

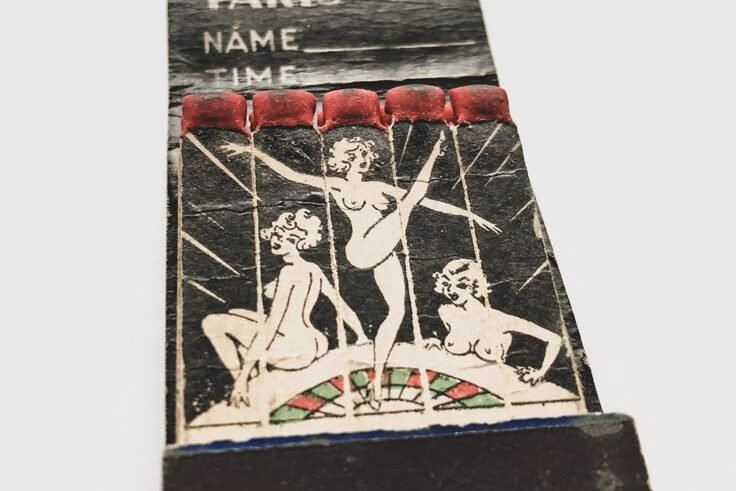

9. Publishing comic matchbooks with sexist humor

In the 1950s, matchbooks were common collectibles, often printed with lighthearted jokes about marriage. Many portrayed wives as nagging or husbands as lazy, making fun of domestic life. These jokes were accepted entertainment, handed out freely at diners and gas stations. People shared them without considering that they might reinforce harmful stereotypes. Humor that feels crude today was seen as socially normal then.

Cultural analysts explain that these small items reflected broader attitudes about gender roles in mid-century America. Advertising used humor to cement ideas of women as housekeepers and men as breadwinners. It took decades for society to question this portrayal. Today, such jokes would be criticized for perpetuating bias. These matchbooks remind us how casual humor once spread outdated ideas through everyday objects people barely noticed.

10. Serving unpasteurized milk to children in schools

During the 1940s and early 1950s, many schools in the U.S. served raw milk to children at lunchtime. Parents and school boards believed fresh milk straight from the farm was the healthiest option. At that time, safety standards were inconsistent, and pasteurization wasn’t mandatory in every state. Drinking unprocessed milk was simply part of everyday childhood nutrition.

Public health studies later connected raw milk to tuberculosis, salmonella, and brucellosis outbreaks among children. Laws changed to enforce pasteurization in schools and public cafeterias, making milk much safer. Today, serving raw milk to kids would be unacceptable in most places. This shift shows how food safety practices evolved, moving from tradition-based decisions to science-backed health protections over time. It’s a reminder of how knowledge has reshaped basic school lunch habits for future generations.

11. Using DDT inside homes to kill bugs

In the late 1940s and early 1950s, families commonly sprayed DDT indoors to fight pests. Advertisements called it a miracle chemical, safe enough for bedrooms and nurseries. Parents dusted it on mattresses, curtains, and even children’s clothing. Few questioned the risks because science and government endorsed its use. It felt like modern living to rely on strong chemicals.

Decades later, research revealed DDT’s links to cancer, infertility, and environmental harm, including collapsing bird populations. Public campaigns that began in the 1960s eventually led to a U.S. ban on most uses in 1972. Today, using such chemicals indoors would spark outrage. The shift from unquestioning trust to strict regulation shows how limited information once shaped household safety habits. People truly believed they were protecting their families when, in fact, they were unknowingly exposing them to danger.

12. Letting children ride unrestrained in cars

In the 1940s and 1950s, most cars lacked seat belts, and child safety seats were virtually unknown. Parents let kids sit on laps, stand in back seats, or lie down during long drives. Crashes were often deadly for children, yet few questioned these practices. Car travel felt safe, and restraint devices were not seen as necessary for everyday trips.

Automotive research in the 1960s revealed the life-saving benefits of seat belts and proper car seats. Laws eventually required restraints, but some families resisted at first. Today, not buckling a child is illegal and considered reckless parenting. This change shows how cultural norms around safety can shift rapidly when backed by evidence and advocacy. What once seemed harmless now feels shocking, a reminder of how progress transforms basic habits on the road.

13. Wearing makeup with toxic ingredients

In the 1940s, many cosmetic products contained toxic substances like lead, mercury, and arsenic. Women used these daily, trusting marketing claims of beauty and skin improvement. Regulations were weak, and companies faced no obligation to list harmful ingredients. Wearing makeup with poisons was normal because people didn’t know better, relying on brands to keep them safe.

Over time, medical research linked these chemicals to serious health problems, from lead poisoning to skin disorders. Governments eventually tightened laws and banned hazardous ingredients. Today, such products would never reach the shelves. The shift shows how little oversight once existed in personal care and how much progress has been made. Mid-century beauty routines remind us that trust in consumer goods often came before safety, leaving women exposed to unseen dangers.

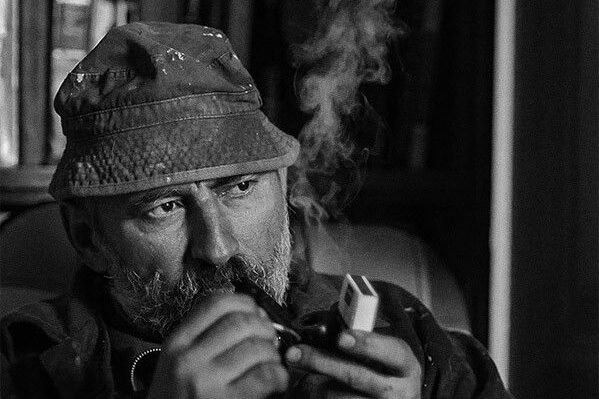

14. Smoking indoors almost everywhere

In mid-century America, smoking was a universal habit, permitted in restaurants, offices, airplanes, and even hospitals. Cigarettes were advertised as stress relievers and symbols of sophistication. People smoked around children and nonsmokers without hesitation. It was ordinary social behavior that no one challenged, and many workplaces offered ashtrays on every desk.

Scientific studies later exposed strong links between smoking, secondhand smoke, cancer, and heart disease. Gradually, public campaigns shifted opinion, leading to indoor smoking bans in most public spaces. Today, lighting a cigarette in a hospital or on a plane would be unthinkable. The drastic change in smoking norms shows how long it can take for health evidence to outweigh ingrained habits, even when those habits put millions of people at risk every day.